Hi,

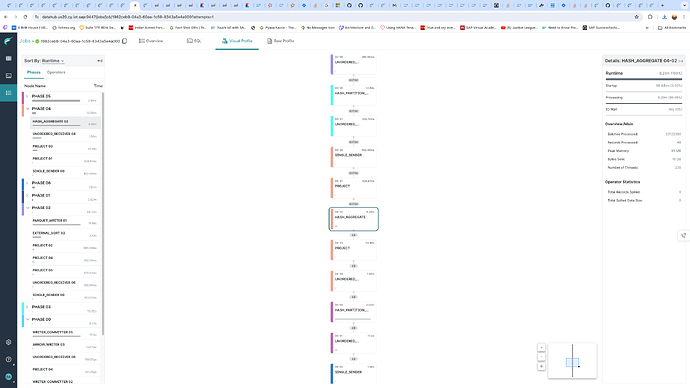

with exec.operator.aggregate.vectorize.use_spilling_operator enabled:

[failure-flag-enabled.zip]

On the source PDS we have a timeseries data for 300 columns and partition by day and hour. We created a raw reflection on view created with aggregate operators min ,max,count…etc at hour level. We are getting the job getting killed closed connection exceptions. It is failing after accumulation of large volumes of data at hash aggregate operator. Disk spill is not happening to keep memory pressure down. Hence which eventually causing Kube process getting killed and restarting the executors.

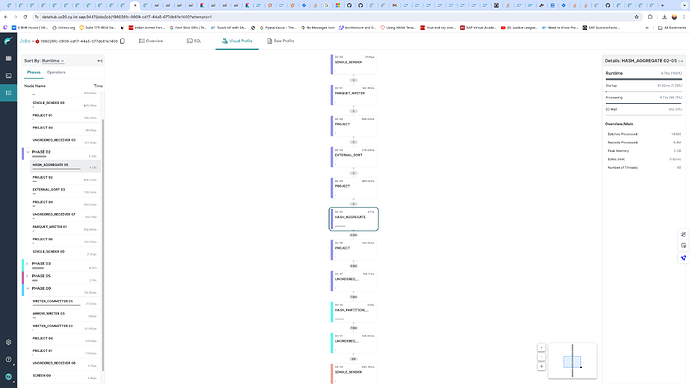

with exec.operator.aggregate.vectorize.use_spilling_operator diabled:

[sucess.zip]

When we ran the same then we dont see any issues and the large volume of data is processed fine in hash aggregate operator in memory.

Let us know is this a known issue. What should be done when we enable the flag exec.operator.aggregate.vectorize.use_spilling_operator to use disk and mitigate issue.

We do see slight performace deltas on eneabling and disabling the flags

dremio version:

24.2.11-202404102036120114-d9c69a0e

Thanks,

Pavan

sucess.zip (1.6 MB)

failure-flag-enabled.zip (1.5 MB)

[@Julian_Kiryakov] [@balaji.ramaswamy] can you investigate the memory issue?.

dd7128a5-bc5b-4b6b-a9ab-af583056c722.zip (1.6 MB)

I dont see any disk spill. This is success case. But there are scenarios we are getting OOM no disk spill happening. Please les us know the config to enable diskspilling for operators.

Here is a scenario where the Hash aggregate operator is failing without using disk spill.

26e4b5db-4b10-41f1-b854-307b2fe75643.zip (729.2 KB)

@pavankumargundeti

The first profiles you uploaded, the failed one seems to have an unresponsive executor situation. The third and fourth profiles have completely different plans. I see a lot of support keys toggled. Let’s do this. Reset the keys below and run the query, if it fails, send the profile

exec.operator.partitioner.vectorize

exec.operator.sort.external.spill.allocation_density

exec.op.join.spill

exec.operator.copier.complex.vectorize

exec.operator.aggregate.vectorize

exec.operator.aggregate.vectorize.use_spilling_operator

You can download the output of sys.options to revert back later. Alternatively, use alter session

Thanks balaji for your initial observations. Attaching the 3 profiles of jobs of reflection failed with OOM. I toggled/reset the needed flags. Let me know your observations on Hashaggregate operator. These reflections does not had issues when exec.operator.aggregate.vectorize.use_spilling_operator false.

a8898afd-9dbf-4ace-a5a0-af8a06b5b76b.zip (946.9 KB)

f6bd1360-f121-48f3-8061-b448891e324e.zip (1.5 MB)

fd25a87f-5f82-423c-b915-7fd19def732f.zip (1.5 MB)

Any update on this @balaji.ramaswamy

Apologize for the delay @pavankumargundeti Will provide an update this week

Thank you waiting for your reply …

When the support option is set to ‘true’, the spillable hash agg algorithm is allocating something called as ‘PREALLOCATED_MEMORY’ and if the query is highly parallelized it could be an issue. I am investigating what this in MB per thread is

There are 1800 accumulators which include min, max, sum, count…

Dremio preallocate a batch of memory in the hashtable as part of initDataStructures… and the batch size is 256k for all 8 partitions for a single accumulator…as there are 1800 accumulators we allocate (256 * 1024 * 1800) = 450MB per operator. which explains the initial prealloc memory required for the operator…this is by design of the Spillable HashAgg operator

@balaji.ramaswamy thanks a lot for your analysis. Is there any way we can reduce the preallocation batchsize and go for DISK for spill?. As this poses more OOM issues and might require more memory for each operator. When exec.operator.aggregate.vectorize.use_spilling_operator is false looks like no pre-alloaction happening hence the reflections are working fine.

@pavankumargundeti 25.x has Memory Arbiter and Join spilling enabled. Can you please run the query on latest 25.0.x with default settings, exec.operator.aggregate.vectorize.use_spilling_operator back to true and send us the profile?

We have to wait as we are using SAG supported dremio 24.3. Not sure when they will update to 25.x. But thanks for the update.

@pavankumargundeti Let me see what other work arounds we can do in the mean time

Thanks

Bali