I get this error when I try to create a reflection:

Some virtual datasets are out of date and need to be manually updated. These include: "@oluis".vwTempoDosCalculo. Please correct any issues and rerun this query.

What can I do to solve it?

@oluis

Where does this error show up? At query time?

In the server logs?

Can you share the query profile?

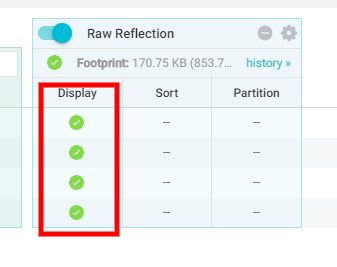

Also, can you share a screenshot of the reflection status in the VDS?

Apparently you have a Mongo data source and defined a VDS with some fields, for example field1, field2, etc.

Then you activated a raw reflection, but some of these fields have since changed at the origin, for example a field now has mixed data types or was removed.

How are your VDSs defined (the current VDS that throws the error and the vwTempoDosCalculo VDS)?

Also, can you share a screenshot of the reflection definition of both VDSs?

some as

This is the failed VDS when creating a reflection

This is the origin

My origin comes from an aggregation of a table in MongoDB. The table has 2.5 million documents, and the aggregation produces up to 20x more.

Please share the SQL of the VDS.

Apparently some fields are inconsistent; they could be some of the mixed data type fields (the ones with the A# mark).

The SELECT is only this:

SELECT *

FROM "mongo-prod".statistics.vwTempoCalculo

WHERE DATA = CURRENT_DATE

Please avoid using *; always list the fields explicitly instead, because * can cause inconsistent metadata.
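As a rough sketch of what an explicit field list could look like: only DATA is known from this thread, so field1 and field2 below are hypothetical placeholders for the real columns of the view.

```sql
-- field1 and field2 are hypothetical names; replace them with the actual
-- columns of vwTempoCalculo. DATA is the only column named in this thread.
SELECT field1, field2, DATA
FROM "mongo-prod".statistics.vwTempoCalculo
WHERE DATA = CURRENT_DATE
```

With the columns listed, the VDS schema no longer changes silently when fields are added to or removed from the source.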

Rewrite your query, then execute:

ALTER PDS "mongo-prod".statistics.vwTempoCalculo REFRESH METADATA

Then retry creating the reflection.

We have fixed this behavior in 4.1.8. In 4.1.6, did you run a query on the VDS and did that work?

This helped me resolve it. Thanks.

Can someone describe how to generalize this for other data sources? Is it

ALTER PDS "Source-Name"."FolderSchemaEtc"."TableFileEtc"

Also, how does this manual command compare to the Dataset Handling Metadata settings at the source level? For example, with Dataset Discovery set to Fetch every 1 Hour(s), can I set it to a minute, wait for it to complete, and get the same effect without running a query against any specific PDS?

You should not run the background refresh every minute, as that can cause continuous load on the coordinator. The best approach is to call "alter pds refresh metadata" for the affected tables at the end of the ETL pipeline. The background refresh and "alter pds refresh metadata" do the same thing: only changed datasets (modified timestamp newer than the last refresh) are refreshed. If you add "FORCE UPDATE" at the end, Dremio refreshes all folders regardless of whether the timestamp changed.

Thanks

Bali
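For reference, the two refresh variants described above might look like this, reusing the table path from earlier in the thread. This is a sketch based on the wording in the reply; whether the keyword is PDS or TABLE may depend on your Dremio version, so check the documentation for your release. Run each statement separately.

```sql
-- Incremental refresh: only datasets whose modified timestamp changed
-- since the last refresh are re-read.
ALTER PDS "mongo-prod".statistics.vwTempoCalculo REFRESH METADATA

-- Full refresh: re-read everything regardless of timestamps.
ALTER PDS "mongo-prod".statistics.vwTempoCalculo REFRESH METADATA FORCE UPDATE
```

For other sources, the path would follow the same pattern as in the question above, e.g. "Source-Name"."FolderSchemaEtc"."TableFileEtc", followed by REFRESH METADATA.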